Storage virtualization is a necessary prerequisite for deriving maximum benefit from system virtualization. The mobility of a virtual machine (VM) and its data plays an important role in daily operational tasks ranging from load balancing to system testing. That makes a SAN essential for leveraging all the advanced features of a VMware Virtual Infrastructure (VI) environment. The issues of availability and mobility, however, come to the forefront in a disaster-recovery scenario.

Harnessing the synergies of system and storage virtualization is seldom an issue in data centers, but it is often a major hurdle for IT administrators at smaller companies or remote branch locations. To remove that stumbling block, LeftHand Networks has introduced the Virtual SAN Appliance (VSA) for VMware ESX. In a few simple steps, administrators can employ two or more VSAs to create a fully redundant iSCSI SAN from previously isolated direct-attached storage (DAS) on ESX servers.

LeftHand’s VSA opens the door for SMBs and remote office sites to create and manage a secure, cost-effective, and fail-safe iSCSI SAN simply by leveraging existing DAS on ESX servers. Moreover, for cost-conscious, risk-averse IT decision-makers, LeftHand’s VSA has two critical distinctions:

- This VMware appliance is a version of SAN/iQ, a storage software platform built on the Linux kernel. It is not an application running on a commercial OS that requires an additional license; and

- The appliance is designed to be clustered using multiple ESX servers for the iSCSI SAN to withstand the loss of one or more servers.

Infrastructure virtualization became a critical focus area for CIOs because it frees IT resources from the constraints of their physical devices. By separating a resource’s function from its physical implementation, system administrators can build generic resource pools based on functionality and manage any member of a pool in the same manner. That opens significant opportunities to reduce IT operating costs and increase resource utilization. In particular, administrators can save a VM’s OS and application software configuration as a template to simplify and standardize the provisioning of systems.

More importantly, storage virtualization in a VMware VI environment is far less complex than storage virtualization in a typical Fibre Channel SAN with physical systems. A standard OS, such as Windows or Linux, assumes exclusive ownership of its storage volumes. For that reason, the file systems of a standard OS do not incorporate a distributed lock manager (DLM), which is essential if multiple systems are to maintain a consistent view of a volume’s contents. That makes volume ownership a critical part of storage virtualization and explains why SAN management is the exclusive domain of storage administrators at most enterprise-class sites.

This is not the case for servers running ESX. The ESX file system, dubbed VMFS, handles distributed file locking, which eliminates exclusive volume ownership as a burning issue. In addition, each file in a VMFS volume is a single-file image of a VM disk, making it loosely analogous to an ISO image of a CD-ROM. When a VM mounts a disk, it opens the disk-image file, VMFS locks that file, and the VM gains exclusive ownership of that virtual disk. With volume ownership a moot issue, iSCSI becomes a perfect way to extend the benefits of physical and functional separation via a cost-effective, lightweight SAN.
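To make the per-file locking idea concrete, here is a minimal Python sketch of a shared volume whose lock table is keyed by disk-image file rather than by volume. The class and method names are hypothetical, and the model deliberately ignores how VMFS actually records its locks on disk; it only illustrates why several hosts can share one volume while each VM's disk remains exclusively owned.

```python
class SharedVolume:
    """Toy model of a shared volume with per-file (not per-volume) locks.

    Hypothetical illustration only: real VMFS keeps its lock state on disk.
    """

    def __init__(self):
        # Maps a disk-image file name to the host currently holding its lock.
        self._locks = {}

    def open_disk(self, host, image):
        """Try to lock `image` for `host`; return True if the host now owns it."""
        owner = self._locks.get(image)
        if owner is not None and owner != host:
            return False  # another host already owns this virtual disk
        self._locks[image] = host
        return True

    def close_disk(self, host, image):
        """Release the lock on `image` if `host` holds it."""
        if self._locks.get(image) == host:
            del self._locks[image]


vol = SharedVolume()
print(vol.open_disk("esx1", "vm-a.vmdk"))  # True
print(vol.open_disk("esx2", "vm-b.vmdk"))  # True: different file, same volume
print(vol.open_disk("esx2", "vm-a.vmdk"))  # False: locked by esx1
vol.close_disk("esx1", "vm-a.vmdk")
print(vol.open_disk("esx2", "vm-a.vmdk"))  # True: lock was released
```

Because contention is resolved per file, no host ever needs exclusive ownership of the whole volume, which is the property that lets a shared iSCSI volume serve every ESX server at once.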

To support a VMware VI environment, openBench Labs set up two quad-processor servers, a Dell PowerEdge 1900 and an HP ProLiant DL580 G3, as our core ESX servers. These servers would run multiple VMs with either Windows Server 2003 SP2 or Novell SUSE Linux Enterprise Server 10 SP1 as their operating system. For our initial SAN/iQ cluster, we ran a LeftHand VSA on the Dell PowerEdge 1900 and another on an HP ProLiant ML350 G3.

Each VSA gets its functionality by running the latest version of the LeftHand SAN/iQ software within a VM. Our test scenarios focused on ease of use, performance, and fail-over, with both two and three VSAs forming a cluster. We focused on testing flexibility because a key component of the SAN/iQ value proposition is the automation of system management tasks. A heterogeneous cluster tests the ability of SAN/iQ to aggregate disparate devices into a simplified, uniform virtual resource pool. A homogeneous cluster, on the other hand, provides a virtualized environment with more-predictable performance characteristics.

In either case, the SAN/iQ virtual environment includes thin provisioning, synchronous and asynchronous replication, automatic fail-over and failback, and snapshots. Administrators access and manage all of these functions via a Java-based Centralized Management Console. What distinguishes the software, however, is the degree to which SAN/iQ simplifies the configuration and management of a highly available iSCSI SAN. Using software modules, dubbed managers, SAN/iQ automates communications between VSAs, coordinates data replication, synchronizes data when a VSA changes state, and handles cluster reconfiguration as VSAs are brought up or shut down.

Even more important for SMBs and branch offices, SAN/iQ simplifies the configuration and management of storage for its iSCSI SAN. Its strategy is to eliminate traditional administrator tasks through automation within the VSAs. That automation goes as far as handling I/O load balancing entirely on its own, which is critical for cluster scalability.

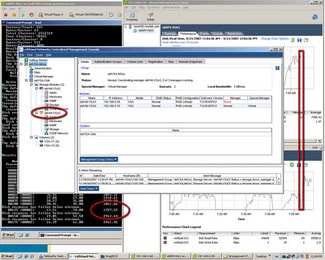

The SAN/iQ Centralized Management Console provides a hierarchical, single-window view of SAN storage. Under this scheme, VSAs are federated to simplify management by policy. At the top of the hierarchy is the Management Group, which integrates a collection of distributed VSAs within a specific set of security and access policies. By allowing administrators to dynamically add or delete a VSA, a Management Group creates a federated approach to data integration, pooling, and sharing.

Within a Management Group, VSAs can be grouped into a storage cluster for the purpose of aggregating local storage into a single, large virtualized storage resource for an iSCSI SAN that is compliant with the policies of the Management Group. The goal is to lower the cost of storage management by hiding all of the complexities associated with the physical aspects of DAS such as data location and local ownership. Using the SAN/iQ console, we were able to log into our site’s Management Group and gain complete access to our storage cluster and each VSA in our cluster. More importantly, by virtue of the SAN/iQ hierarchy, we were able to configure and manage VSAs holistically.

We initially created a SAN/iQ Management Group and cluster with two VSAs, one on the HP ProLiant ML350 G3 and the other on the Dell 1900. To automate decision-making, SAN/iQ implements a voting algorithm that requires a strict majority of managers, dubbed a quorum, to agree on any course of action. That scheme required us to activate a manager on each VSA as well as a third manager: with only two managers, the loss of either VSA would leave the survivor without a majority.

The easiest way to add that manager was to put a Virtual Manager, running on one of the ESX servers, into our Management Group. A more robust solution would be to run LeftHand’s Failover Manager, which is simply a VM containing only the SAN/iQ manager module, within VMware Player on an existing Windows server.
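The arithmetic behind that third manager is plain strict-majority voting. The short Python sketch below uses hypothetical function names and makes no claim to mirror SAN/iQ's internal code; it simply shows why two managers cannot survive the loss of one while three can.

```python
def quorum_size(managers: int) -> int:
    """Smallest strict majority of `managers` voting managers."""
    return managers // 2 + 1


def has_quorum(running: int, managers: int) -> bool:
    """True if the managers still running can form a strict majority."""
    return running >= quorum_size(managers)


for total in (2, 3):
    print(total, "managers: quorum =", quorum_size(total),
          "| survives one failure:", has_quorum(total - 1, total))
# 2 managers: quorum = 2 | survives one failure: False
# 3 managers: quorum = 2 | survives one failure: True
```

The same rule explains why odd manager counts are the natural choice: going from three managers to four raises the quorum to three without tolerating any additional failures.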

What enables the advanced automation implemented by SAN/iQ is its method for storing data in clusters. The first visible sign of this scheme is that the total storage supported by a cluster is not simply the sum of the DAS available at each VSA storage module in the cluster.

As VSAs join to form a cluster, SAN/iQ examines the total amount of DAS discovered at each ESX server host. SAN/iQ then automatically creates a cluster-wide storage pool in which each VSA module contributes the same amount of disk space, equal to the smallest amount of storage found on any individual VSA.
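A minimal Python sketch of that sizing rule, using hypothetical capacities, shows why the pool is smaller than the total DAS: every VSA contributes only as much as the smallest one. It models raw capacity only, before replication or any SAN/iQ metadata overhead, and the function name is illustrative.

```python
from typing import Sequence


def cluster_pool_gb(vsa_capacities_gb: Sequence[float]) -> float:
    """Raw cluster pool when each VSA contributes only as much as the smallest VSA."""
    if not vsa_capacities_gb:
        return 0
    return min(vsa_capacities_gb) * len(vsa_capacities_gb)


# Hypothetical DAS capacities (GB) seen by three VSAs.
capacities = [500, 300, 400]
print(sum(capacities))              # 1200 GB of DAS in total
print(cluster_pool_gb(capacities))  # 900 GB in the pool: 3 x 300 GB
```

Equal contributions are what make the equal-sized subdisks described next possible; the trade-off is that any capacity above the smallest VSA's share goes unused.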

When an administrator creates a virtual disk volume in a cluster, SAN/iQ splits the volume’s disk image into equally sized subdisks residing on each of the VSA storage modules in the cluster. As a result, all I/O commands associated with that volume are spread across all of the VSAs in the cluster. This scheme provides maximum scalability for the cluster with no intervention or tuning by administrators. What’s more, whenever a new VSA joins a cluster or an existing VSA is removed, SAN/iQ immediately restructures all of the cluster volumes as a background task.
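One way to picture how a volume is spread across VSAs is round-robin striping of fixed-size units. The Python sketch below assumes a hypothetical stripe unit of 256 blocks and simple rotation across VSAs; SAN/iQ's actual layout and unit size are not described here, so treat it as an illustration of even spreading rather than the product's algorithm.

```python
from collections import Counter


def locate_block(block: int, num_vsas: int, stripe_blocks: int = 256):
    """Map a volume's logical block to (vsa_index, block offset on that VSA).

    Hypothetical round-robin striping; stripe_blocks is an assumed unit size.
    """
    stripe = block // stripe_blocks      # which stripe unit holds the block
    offset = block % stripe_blocks       # position inside that stripe unit
    vsa = stripe % num_vsas              # stripe units rotate across the VSAs
    local_stripe = stripe // num_vsas    # units already placed on that VSA
    return vsa, local_stripe * stripe_blocks + offset


print(locate_block(0, 2))    # (0, 0)
print(locate_block(256, 2))  # (1, 0)
print(locate_block(600, 2))  # (0, 344)

# Every VSA ends up holding the same share of the volume, so I/O spreads evenly.
blocks_per_vsa = Counter(locate_block(b, 3)[0] for b in range(3 * 256 * 10))
print(sorted(blocks_per_vsa.items()))  # [(0, 2560), (1, 2560), (2, 2560)]
```

Under this kind of layout, adding or removing a VSA changes `num_vsas` and therefore the home of most stripe units, which is consistent with the text's point that SAN/iQ must restructure volumes in the background whenever membership changes.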

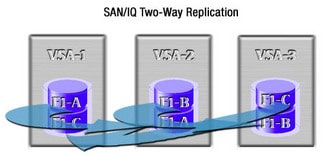

To provide high availability, an administrator can assign or change the replication level of a volume: none, two-, three-, or four-way replication. With two-, three-, or four-way replication, each VSA replicates its portion of a volume onto one, two, or three adjacent nodes in the cluster. That means each member of the cluster stores a distinct subset of two, three, or four subdisk regions. As a result, one, two, or three cluster members, respectively, could fail and the entire volume would still be available online.
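The failure tolerance follows directly from placing copies on adjacent nodes. The Python sketch below is a simplified model, assuming one region per node with copies on the next `level - 1` nodes of a ring; the function names are hypothetical and the code is not SAN/iQ's. It checks every possible set of failed nodes by brute force.

```python
from itertools import combinations


def replica_nodes(region: int, num_nodes: int, level: int) -> list:
    """Nodes holding copies of `region` with `level`-way replication (1 = none).

    Assumes level <= num_nodes so that every copy lands on a distinct node.
    """
    return [(region + i) % num_nodes for i in range(level)]


def volume_online(failed: set, num_nodes: int, level: int) -> bool:
    """True if every region still has at least one copy on a surviving node."""
    return all(
        any(node not in failed for node in replica_nodes(r, num_nodes, level))
        for r in range(num_nodes)
    )


def survives_any(failures: int, num_nodes: int, level: int) -> bool:
    """True if the volume stays online for every possible set of failed nodes."""
    return all(
        volume_online(set(failed), num_nodes, level)
        for failed in combinations(range(num_nodes), failures)
    )


# Four-VSA cluster: N-way replication tolerates any N - 1 failures, but not N.
for level in (1, 2, 3, 4):
    print(level, survives_any(level - 1, 4, level), survives_any(level, 4, level))
# 1 True False
# 2 True False
# 3 True False
# 4 True False
```

Adjacent placement guarantees that the `level` copies of any region sit on `level` distinct nodes, so fewer than `level` failures can never remove all of them.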

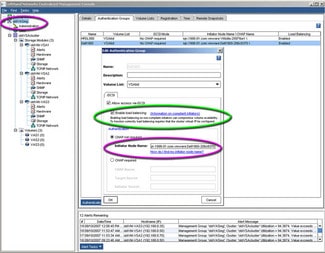

Once volumes are created, administrators can easily handle their presentation to hosts by creating Authentication Groups, which set the security policies for access to target volumes within a Management Group. These access rules can be based on the unique name of the client’s iSCSI initiator or on the Challenge-Handshake Authentication Protocol (CHAP). More importantly, the SAN/iQ VSAs create a full-featured, general-purpose iSCSI SAN. Any system with LAN connectivity can be a SAN client. IT can even leverage this SAN to centralize storage for all local desktop machines in addition to any ESX servers that are not part of the Management Group.
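For the CHAP option, iSCSI uses the MD5 response defined in RFC 1994: the initiator returns MD5 computed over the one-byte identifier, the shared secret, and the target's challenge, and the target recomputes that value to verify it. The Python sketch below shows that computation alongside a hypothetical initiator allow-list standing in for an Authentication Group rule. The IQNs, secret, and function names are illustrative only and are not SAN/iQ's interface.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """RFC 1994 CHAP-MD5 response: MD5(identifier byte || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def iqn_allowed(initiator_iqn: str, allowed_iqns: set) -> bool:
    """Hypothetical rule: admit only initiators listed in the group."""
    return initiator_iqn in allowed_iqns


def chap_valid(secret: bytes, identifier: int, challenge: bytes,
               response: bytes) -> bool:
    """Target side: recompute the expected response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Illustrative values only.
group = {"iqn.1998-01.com.vmware:esx1"}
secret = b"example-chap-secret"
challenge = os.urandom(16)                   # target issues a fresh challenge
reply = chap_response(1, secret, challenge)  # computed by the initiator
print(iqn_allowed("iqn.1998-01.com.vmware:esx1", group))  # True
print(chap_valid(secret, 1, challenge, reply))            # True
print(chap_valid(b"wrong-secret", 1, challenge, reply))   # False
```

An initiator-name rule is simple to administer but trusts whatever name a client presents; CHAP adds proof of a shared secret without sending the secret itself over the LAN.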

Our next step was to grant all of our ESX servers, or any other systems, iSCSI access rights to the logical volumes exported by the VSA cluster. We were then able to import those volumes on any ESX server for use by any of its VMs. In effect, each ESX server re-imported storage that had originally been attached to, configured on, and orphaned on a single ESX server.

The foundation for this networking legerdemain is a virtual switched LAN created within each ESX server to handle all networking for hosted VMs. On each ESX server, the virtual switch was built on a pair of physical Ethernet NICs with TCP offload engines (TOEs), which ESX teamed together. All VMs, including our VSAs, connect to the virtual switch via virtual NICs. We also needed to create a virtual NIC for the ESX server itself and a VMkernel port, which the ESX kernel uses to make iSCSI connections via VMware’s software initiator.

To lessen the immediate impact of storage provisioning with replication, SAN/iQ supports full and thin provisioning. Full provisioning reserves the same amount of space on the SAN that is presented to application servers. On the other hand, thin provisioning reserves space based on the amount of actual data, rather than the total capacity that is presented to application servers.

The traditional advantages of thin provisioning arose from the fact that expanding volumes on Windows and Linux servers was once a very daunting task. Thin provisioning at the SAN avoided the expense of either over-provisioning storage immediately or overhauling the OS later. While advances in logical volume support within Windows and Linux now mitigate the impact of expanding volumes within an OS, system virtualization greatly expands the matrix of mappings from all of the systems to all of the physical servers on which those systems may run. As a result, thin provisioning at the SAN level becomes essential in a Virtual Infrastructure environment.

As with load balancing, SAN/iQ fully supports dynamic allocation of storage resources by automatically growing logical volumes from a pool of physical storage. For dynamic growth, thin provisioning is activated for any logical drive using a simple checkbox. Once set, SAN/iQ automatically handles all of the details and maps additional storage blocks on-the-fly as real usage warrants the growth of a logical drive. From a storage management perspective, it is only necessary to ensure that an adequate number of DAS drives are available from which physical blocks can be allocated as needed to logical blocks.
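The allocate-on-write behavior behind thin provisioning can be sketched in a few lines of Python. The classes below are hypothetical and track block mappings only, with no data, replication, or striping. They show how thin volumes can present more capacity than the pool physically holds, consuming a physical block only when a logical block is first written, which is why the remaining management task is keeping enough DAS in the pool.

```python
class StoragePool:
    """Physical blocks available from the cluster's DAS (hypothetical model)."""

    def __init__(self, physical_blocks: int) -> None:
        self.free = list(range(physical_blocks))  # unallocated physical blocks

    def allocate(self) -> int:
        if not self.free:
            raise RuntimeError("pool exhausted: add DAS drives to the cluster")
        return self.free.pop()


class ThinVolume:
    """Presents `size` logical blocks but consumes physical blocks only on first write."""

    def __init__(self, pool: StoragePool, size: int) -> None:
        self.pool = pool
        self.size = size
        self.mapping = {}  # logical block -> physical block, filled lazily

    def write(self, lblock: int) -> int:
        """Return the physical block backing `lblock`, allocating it if needed."""
        if not 0 <= lblock < self.size:
            raise IndexError("logical block outside the presented volume")
        if lblock not in self.mapping:
            self.mapping[lblock] = self.pool.allocate()
        return self.mapping[lblock]

    @property
    def consumed(self) -> int:
        return len(self.mapping)


pool = StoragePool(1000)
vol_a = ThinVolume(pool, 800)   # two volumes present 1,600 blocks in total,
vol_b = ThinVolume(pool, 800)   # more than the 1,000 physical blocks behind them
for block in range(100):
    vol_a.write(block)
vol_a.write(5)                  # rewriting a mapped block allocates nothing new
print(vol_a.consumed, vol_b.consumed, len(pool.free))  # 100 0 900
```

Full provisioning, by contrast, would allocate all 800 blocks for each volume at creation time, and the second volume could not be created at all from a 1,000-block pool.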

Test results

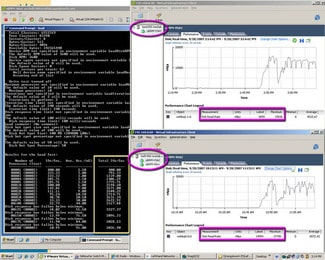

To test both the functionality and the performance of the VSA-supported iSCSI SAN, we ran our oblLoad benchmark within Windows Server 2003 VMs on each of our core ESX servers. The oblLoad benchmark stresses the ability of all storage and networking components in a SAN fabric to respond rapidly to very high rates of I/O operations per second (IOPS). Because it stresses data access far more than data throughput, oblLoad creates much more iSCSI command-processing overhead for all SAN components than a sequential throughput test does.

We measured the results within the test VM and also measured the performance of the three VSAs via VMware’s VI Console client. Performance for oblLoad was dependent upon:

- The number of drives in each server’s disk array—four 15,000rpm Ultra320 SCSI drives on the HP ProLiant ML350 server and six 10,000rpm SATA drives on the Dell 1900 server; and

- The caching capabilities of each server’s storage controller—an HP SmartArray with a 128MB cache and a Dell PERC 5i with a 256MB cache.

We began the process using a VM running Windows Server 2003 on our Dell PowerEdge 1900. It quickly became clear that we were not stressing the limits of our iSCSI cluster, but rather the limits of the local storage systems. In particular, the VSA on the HP ProLiant ML350 G3, which was configured with half the controller cache and 67% of the drive spindles of the Dell PowerEdge 1900, was about 50% slower processing its half of the logical volume.

The implication was that the HP server was slowing down cluster performance. To verify that hypothesis, we used the VMware VI console to suspend the VSA running on the HP ML350 server while the VM on the Dell ESX server was running oblLoad. In essence, we created a VSA failure.

In theory, at the point of VSA failure, the remaining two managers should confer and move all processing for the logical drive to the remaining VSA on the Dell server. Also, because the cluster was now entirely dependent on the Dell server with its more-robust storage subsystem, I/O processing should improve measurably.

In practice, that is exactly what happened: throughput jumped from about 2,000 IOPS to 3,000 IOPS. More importantly, all of that happened automatically, with no intervention on our part. Through the creation of a management hierarchy and an automation scheme within that hierarchy, SAN/iQ creates a high-availability scenario that can easily be managed by system administrators in an SMB IT environment or via lights-out management hardware at a remote branch office.