Most IT managers consider virtual operating environments (VOEs), such as the VMware ESX infrastructure, to be the magic bullet for lowering the cost of IT operations. To realize that opportunity, however, IT must be ready to handle scalability on two dimensions: virtual machines (VMs) must scale to support I/O- or compute-intensive applications, and VOE servers must scale to support a growing load of VMs.

For a SAN administrator, a VOE initiative radically alters where change originates on a SAN fabric: changes in Fibre Channel (FC) infrastructure will now originate at the edge of the fabric, because servers in the data center, rather than storage arrays, drive the new business model.

When resource-intensive applications are deployed on a VM, these applications generate an overwhelming amount of data traffic and stress the underlying VOE storage system in terms of capacity, throughput, and availability. That pegs the scalability of a VOE on the number of VMs running on each VOE server and the impact of a VOE server on the successful consolidation of SAN connections.

To handle all of the dimensions of I/O demanded in a VOE, QLogic has introduced a portfolio of 8Gbps FC infrastructure solutions. At center stage is the QLogic 2500 Series of FC host bus adapters (HBAs) and the SANbox 5800 Series Stackable Switch with 20 8Gbps FC ports and four 20Gbps stacking ports.

For a fast payback, QLogic’s 8Gbps SAN infrastructure can make just as big an impact on VOE management simplification as it does on I/O throughput performance. With two 8Gbps ports on a single HBA, the QLogic 2500 HBAs give a single VOE server enough bandwidth to supply eight VMs with the equivalent bandwidth of eight physical servers with dedicated 2Gbps HBAs. That opens the door to complete VM mobility among VOE servers, which is essential for IT to balance workloads and maximize the utilization of resources.

Equally important is the support for new levels of device virtualization in QLogic’s portfolio. As the pace of VOE adoption quickens and VMs become the deployment method of choice for business-critical applications, the ability to track I/O resource utilization within a SAN will be critical for IT. A key criterion for any IT system virtualization or consolidation initiative is a shared storage infrastructure that scales rapidly and efficiently. Such a shared infrastructure permits the movement, control, and balancing of workloads and data.

To assess the impact of QLogic’s 8Gbps FC SAN infrastructure on both the functionality and scalability of a VOE, openBench Labs used VMware ESX 3.5 to set up a test scenario for VOE scalability.

For the foundation of our VOE physical infrastructure, we used a multiprocessor Dell PowerEdge 6850 with four 3.66GHz Intel Xeon CPUs as the host server. We then wove a SAN fabric using a 4Gbps QLogic QLE2460 HBA, an 8Gbps QLE2560 HBA, and a SANbox 5802V switch. For fast storage arrays, we employed two Texas Memory Systems RamSan-400 solid-state disk (SSD) arrays. Each of these SAN storage systems had four controllers with two 4Gbps FC ports. Finally, we configured eight VMs, on which we ran the oblLoad and IOmeter benchmarks on Windows Server 2003.

Our openBench Labs VOE scalability scenario represents an absolute worst case for exposing potential SAN bottlenecks. We deliberately designed our fabric topology to put maximum stress on the QLogic QLE2560 HBA and SANbox 5802V switch by employing only one FC switch and converging eight 4Gbps data paths onto the one 8Gbps HBA. As a result, sources of performance and scalability bottlenecks would be limited to SAN transport and VOE issues.
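The degree of stress in that topology can be put in numbers with a quick fan-in calculation. The following is a minimal sketch, assuming the eight 4Gbps data paths all feed a single active 8Gbps HBA port; the function and constant names are illustrative and do not come from any QLogic or VMware tool.

```python
# Fan-in sketch: eight 4Gbps storage paths converge on one 8Gbps HBA port.
# Names are hypothetical; rates are the nominal figures quoted in the article.
STORAGE_PATHS = 8        # data paths from the SSD arrays
STORAGE_PATH_GBPS = 4    # nominal rate of each array-side FC port
HBA_PORT_GBPS = 8        # nominal rate of the one active QLE2560 port


def fan_in_ratio(paths: int, path_gbps: float, target_gbps: float) -> float:
    """Return aggregate source bandwidth divided by the target port's bandwidth."""
    return (paths * path_gbps) / target_gbps


ratio = fan_in_ratio(STORAGE_PATHS, STORAGE_PATH_GBPS, HBA_PORT_GBPS)
print(f"Storage-side bandwidth exceeds the HBA port by a factor of {ratio:.0f}")
```

Under these assumptions the storage side can offer four times what the HBA port can carry, which is why any ceiling observed in the tests should sit in the SAN transport or the VOE rather than in the arrays.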

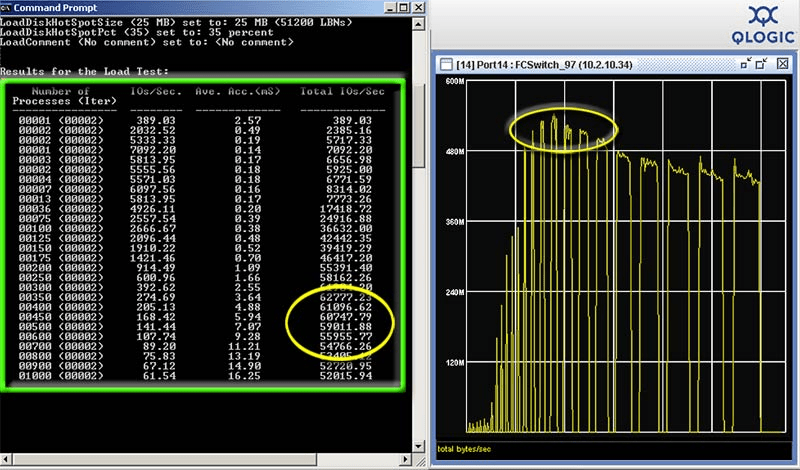

Having created our first logical drives, we ran oblLoad, which generates transactions in a simulated database pattern. Using 8KB reads, we processed more than 60,000 I/O requests per second.

To derive maximum benefits from a VOE, a shared-storage SAN is essential. VM load balancing and high availability are pinned to the construct of VM mobility via software, such as VMotion. As a result, VOE host servers must be capable of accessing the same storage in a way that does not negatively impact any service level agreements (SLAs) associated with the VM.

Complicating the issue, virtualization of VM storage devices has traditionally focused on simplifying system management on the VM by conceptually isolating all disk resources as direct-attached storage (DAS) devices. While the simplicity of the DAS-device virtualization model is ideal for many initial VM projects, it is very problematic for projects that involve consolidating large-scale enterprise applications that have been designed to run on systems within a SAN environment.

First, that model for system virtualization makes the small number of VOE servers responsible for ensuring the I/O levels of all of the VMs, each of which has likely been resident on a 1U server with a dedicated 2Gbps FC SAN connection. With the mobility of VMs among servers a key to the value proposition of a VOE, it is essential that HBA performance keep pace with the performance of multi-core CPUs, which make it very easy to support eight to sixteen VMs on a single server.

Second, there is a need for IT to resolve multiple operational issues, such as SAN resource accounting and zone-based device access, as physical systems are migrated to VMs in a VOE. As a VM, a system designed to operate in a SAN will now be shielded from fabric infrastructure. That burdens IT with all of the tasks needed to modify existing operations, procedures, and reporting with regard to storage resources. Planning for and executing those tasks can easily stymie the migration of physical systems into a VOE.

Within a VOE based on version 3.5 of VMware ESX, administrators configure all FC HBAs as devices that belong exclusively to the ESX server. That’s because all storage on a VM is virtualized in a way that represents every logical volume as a direct-attached SCSI disk drive.

Typically, that logical volume is a file with a .vmdk extension that is hosted in a VMware File System (VMFS) volume dubbed a datastore. Significantly, VMFS provides distributed locking for all of the VM’s disk files. With distributed locking, a datastore, which can contain multiple VM disk files, can be accessed by multiple ESX servers. The alternative is to use a raw LUN formatted with the VM’s native file system, which requires a VMFS-hosted pointer file to redirect requests from the VMFS file system to the raw LUN. This scheme is dubbed Raw Device Mapping (RDM).

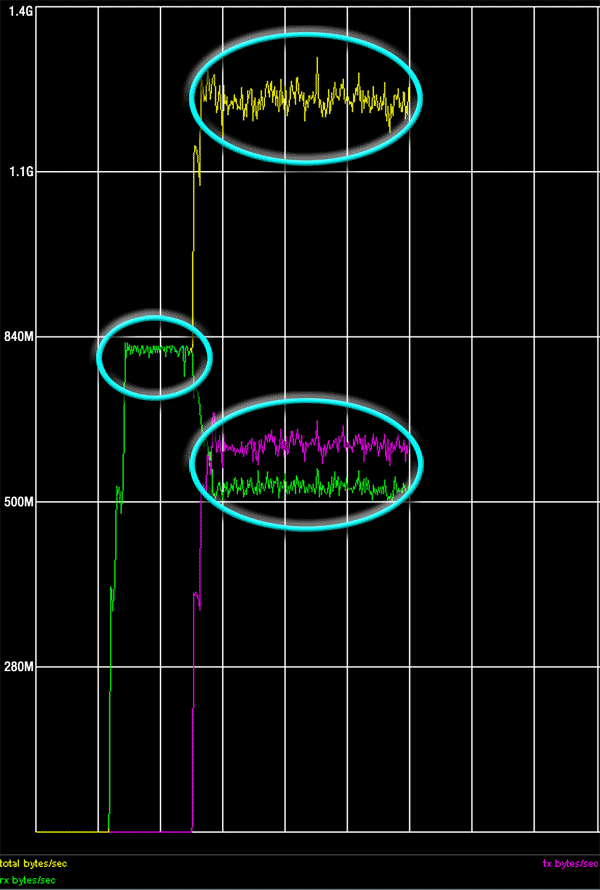

We used IOmeter to drive I/O read throughput on the ESX server from eight VMs. Using the QLE2560 HBA, total I/O throughput converged on theoretical wire-speed—about 800MBps. At the same time, internal I/O balancing had each VM converging on 100MBps. That level of I/O is very consistent with the read throughput typically exhibited on physical machines connected to FC arrays with SATA or SAS drives. On the other hand, read throughput for our ESX server was limited to 400MBps using the QLE2460. That limited I/O throughput on each VM to about 50MBps.

From the perspective of a VM, the construct of a SAN has not existed until now. For SAN objects, such as switches, HBAs, and storage devices, a World Wide Name (WWN) identifier is needed to uniquely identify the object within an FC fabric. Without a unique WWN, a VM can only access a SAN storage LUN using the WWN of a physical HBA installed in the host VOE server. For all practical purposes, that has made a VM invisible to a SAN.

For a VM, the first inroad into the realm of storage networking comes via the introduction of FC port virtualization. N_Port ID Virtualization is an ANSI T11 standard that is commonly dubbed NPIV. Version 3.5 of VMware ESX introduces support for NPIV-aware SAN switches and HBAs. An ESX administrator can now generate a WWN identifier set, which contains a World Wide Node Name (WWNN) and four virtual World Wide Port Names (WWPNs) for physical FC HBA ports.

Virtual WWPNs can be assigned to any VM that uses raw device mapped (RDM) disks. When that VM is powered on, the VMkernel attempts to discover access paths to SAN-based LUNs. On each NPIV-aware HBA where a path is found, a unique virtual port (VPORT) is instantiated. That VPORT functions within the SAN infrastructure as a virtual HBA installed on the VM.

Once we assigned a virtual port WWN to a VM, the NPIV-aware QLogic SANbox 5802V switch immediately recognized the new Port WWN. The switch then associated both the original QLogic HBA Port WWN and the new VMware virtual HBA Port WWN with the same physical port on our NPIV-aware switch.
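The association described above, in which one physical switch port carries both the HBA's own Port WWN and a VM's virtual Port WWN, can be pictured with a toy data model. This is a conceptual sketch only: the `SwitchPort` class, its fields, and the WWPN values are made up for illustration and do not reflect the SANbox firmware, the QLogic management software, or any VMware API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SwitchPort:
    """Toy model of one physical switch port and the Port WWNs logged in through it."""
    number: int
    npiv_enabled: bool = True
    wwpns: List[str] = field(default_factory=list)

    def login(self, wwpn: str) -> None:
        # Without NPIV, the port accepts only a single Port WWN.
        if self.wwpns and not self.npiv_enabled:
            raise RuntimeError("port is not NPIV-aware; only one WWPN allowed")
        if wwpn not in self.wwpns:
            self.wwpns.append(wwpn)


# Made-up WWPN values, for illustration only.
HBA_WWPN = "21:00:00:00:00:00:00:01"   # physical HBA port
VM_WWPN = "28:00:00:00:00:00:00:01"    # virtual port (VPORT) assigned to a VM

port = SwitchPort(number=0)
port.login(HBA_WWPN)   # the ESX host's physical HBA logs in first
port.login(VM_WWPN)    # powering on the VM adds a second WWPN on the same port
print(port.wwpns)
```

The point of the model is simply that, with NPIV, the fabric sees two distinct identities behind one cable, so zoning and accounting can target the VM rather than the host.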

From the perspective of scalability, an 8Gbps FC infrastructure can provide an immediate payback on investment. What makes this leap in bandwidth so important is the rapid acceleration witnessed in server capabilities. With the introduction of quad-core processors and fast PCI-e buses for peripherals, it’s not uncommon to find commodity servers hosting eight or more VMs.

In that kind of environment, an 8Gbps infrastructure can immediately help optimize the utilization of storage resources by avoiding the extra costs associated with the provisioning of multiple HBAs and switch ports for a VOE server. A single dual-ported 8Gbps QLE2560 HBA can provide an ESX server with enough I/O bandwidth to support eight VMs, with each VM garnering the bandwidth equivalent of a dedicated 2Gbps FC connection.
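The equivalence claimed here is simple arithmetic on nominal link rates. A minimal sketch, assuming both HBA ports carry traffic and ignoring protocol overhead (the function name is illustrative):

```python
def equivalent_connections(ports: int, port_gbps: int, legacy_gbps: int) -> int:
    """Number of legacy-rate FC connections matched by an HBA's aggregate nominal rate."""
    return (ports * port_gbps) // legacy_gbps


# Dual-ported 8Gbps HBA versus dedicated 2Gbps connections.
vms_served = equivalent_connections(ports=2, port_gbps=8, legacy_gbps=2)
print(f"One dual-port 8Gbps HBA matches {vms_served} dedicated 2Gbps connections")
```

Note that this is a nominal figure: when the two ports are configured as an active-passive fail-over pair, only one port's bandwidth is available at a time.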

In testing throughput, we first wanted to determine the amount of I/O traffic that a single VM could generate with respect to the bandwidth of an 8Gbps QLogic HBA. Second, we wanted to determine how well one active port on the HBA could satisfy the total I/O demand from our VOE host server. To measure specific performance in these tests, we used the QLogic Enterprise Fabric Suite to monitor all traffic at each port on the SANbox 5802V.

We began by testing whether a single VM could generate sufficient I/O traffic to justify using the QLogic QLE2560 HBA. Using two RDM disks imported from the TMS SSD, we launched our oblLoad benchmark. Using 8KB I/O requests, we were able to process more than 60,000 I/Os per second (IOPS) on the VM. The magnitude of the I/O load processed by the VM has profound implications.

The number of IOPS sustained in our test clearly indicates that a VM has the ability to scale I/O-intense applications, such as Exchange, SQL Server, and Oracle. Equally important, our VM read data at more than 500MBps while processing that I/O load. Significantly, that level of throughput is 25% greater than the maximum level sustainable by a single FC port on a 4Gbps HBA such as the QLE2460. In other words, a 4Gbps HBA in our host server would limit the I/O throughput level a VM could achieve.
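The relationship between the IOPS and throughput figures can be checked with a short calculation. This is a rough sketch, assuming an 8KB request is 8,192 bytes and that MBps means 10^6 bytes per second; the 400MBps ceiling for a 4Gbps port is the figure used elsewhere in this article.

```python
BLOCK_BYTES = 8 * 1024          # assumed size of one 8KB I/O request
FC_4G_PORT_LIMIT_MBPS = 400     # article's figure for one 4Gbps FC port


def throughput_mbps(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Data rate in MBps (10^6 bytes/s) implied by an IOPS rate and request size."""
    return iops * block_bytes / 1_000_000


def iops_needed(mbps: float, block_bytes: int = BLOCK_BYTES) -> float:
    """IOPS required to sustain a given data rate at a given request size."""
    return mbps * 1_000_000 / block_bytes


print(f"60,000 IOPS at 8KB -> {throughput_mbps(60_000):.1f} MBps")
print(f"500 MBps at 8KB    -> {iops_needed(500):,.0f} IOPS")
print(f"500 MBps vs. 4Gbps port limit -> {500 / FC_4G_PORT_LIMIT_MBPS:.2f}x")
```

Under these assumptions, 60,000 IOPS corresponds to a little under 500MBps, so a rate somewhat above 60,000 IOPS is consistent with reading at more than 500MBps, which in turn is 1.25 times the 400MBps ceiling of a single 4Gbps port.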

Our other performance goal was to measure the potential of an 8Gbps SAN infrastructure to scale a VOE and raise the level of storage resource utilization for multiple VMs. To take a closer look at the limitation in I/O performance uncovered in our initial test of a single VM, we used both an 8Gbps QLE2560 and a 4Gbps QLE2460 to handle the I/O traffic generated by eight VMs and eight logical drives on multiple TMS SSD arrays. The RamSan-400 arrays were an ideal means for presenting the ESX server with a SAN environment having multiple 4Gbps devices.

For these tests, we configured the two ports on each HBA as an active-passive fail-over pair. We began by running one worker process that read I/O on each of the eight VMs. During the tests, we increased I/O block sizes over fixed-time intervals. Given our test configuration, total theoretical read throughput for the ESX server was limited to 800MBps using the QLE2560 and 400MBps using the QLE2460.
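Those ceilings, and the per-VM share they imply, follow from the nominal port rate. The sketch below assumes the common rule of thumb of about 100MBps of payload per 1Gbps of nominal FC rate (which matches the 800MBps and 400MBps figures above), a single active port, and an even split of bandwidth across VMs; the names are illustrative.

```python
MBPS_PER_GBPS = 100   # rule-of-thumb conversion, assumed; matches 8Gbps -> 800MBps
VM_COUNT = 8          # VMs sharing the one active HBA port


def port_limit_mbps(port_gbps: int) -> int:
    """Theoretical one-direction throughput of a single active FC port."""
    return port_gbps * MBPS_PER_GBPS


def per_vm_share_mbps(port_gbps: int, vms: int = VM_COUNT) -> float:
    """Even share of the active port's throughput for each VM."""
    return port_limit_mbps(port_gbps) / vms


for name, gbps in (("QLE2560", 8), ("QLE2460", 4)):
    print(f"{name}: {port_limit_mbps(gbps)} MBps total, "
          f"{per_vm_share_mbps(gbps):.0f} MBps per VM")
```

An even split is a simplification of whatever balancing the ESX server performs internally, but it shows why eight VMs would be expected to converge on roughly 100MBps each with the 8Gbps HBA and roughly 50MBps each with the 4Gbps HBA.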

Using IOmeter to drive 32KB reads on two logical SSD-based disks, we brought total read I/O throughput to over 800MBps. Adding two 32KB write I/O processes resulted in more overhead to our ESX server. While throughput for writes hovered around 700MBps, throughput on reads slipped to just over 600MBps. Nonetheless, total overall I/O throughput for simultaneous reads-and-writes was about 1.7 times greater than the theoretical limit for a 4Gbps HBA in our environment.

Turning attention back to individual VMs, internal load balancing by ESX Server had throughput for each VM converging on 100MBps with the QLE2560. Using the QLE2460, however, limited individual VMs to 50MBps as I/O block size increased. Once again, with 8KB reads we saw a distinct break in performance. Using the QLE2560, our eight VMs on the VOE server were each reading more than 50MB of data per second and were responsible for a total I/O load that exceeded 400MBps, a result that closely matches what we measured running the oblLoad benchmark with one VM. What makes this result all the more significant is the fact that Windows systems typically reach I/O throughput levels of 110MBps to 130MBps reading data from RAID-5 arrays built on SATA or SAS disk drives.

In our final I/O stress test, we targeted the ability of one active port on the 8Gbps QLogic QLE2560 HBA to handle multiple read-and-write I/O streams, from multiple storage arrays, with multiple 4Gbps connections. For this test, we configured four VMs, each of which accessed a dedicated RDM volume on one of two SSD arrays. On two of the VMs, we ran an IOmeter worker process that read data using 32KB I/O requests. On the other two VMs, we employed IOmeter worker processes that wrote data to RDM volumes using 32KB I/O requests.

Once again, the two VMs running IOmeter read processes were able to push our host ESX server to sustain I/O throughput at over 800MBps. We then launched the IOmeter write processes on the remaining two VMs. With multiple VMs simultaneously reading and writing data to and from multiple storage arrays within the SAN, our host server with a QLogic QLE2560 HBA sustained a total full-duplex I/O level that was about 1.7 times greater than the maximum bandwidth that could be sustained with a 4Gbps HBA. From another perspective, a single 8Gbps HBA with dual FC ports gives a single host VOE server the ability to provide eight VMs with the I/O bandwidth of eight 2Gbps HBAs.