Yesterday’s article explored the history of InfiniBand and its recent breakthrough into enterprise network storage. Today we look at product offerings and the future challenges that face InfiniBand.

Cisco’s acquisition of Topspin for $250 million in April 2005 also fueled interest in InfiniBand. In a brief on InfiniBand’s impact on Cisco’s switch offerings, Brian Garrett, an analyst with the Enterprise Strategy Group, noted: “Storage switching connects servers to storage systems and devices on a shared network. …Cisco Server Fabric Switching (SFS), based on Topspin’s InfiniBand technology, connects servers within a cluster, enables shared connection to storage, and provides a platform within the network for the virtualization of storage and server resources.”

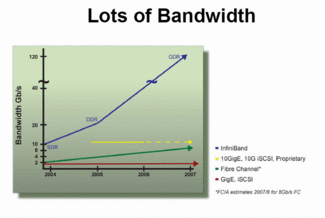

In an interview, Garrett says, “Topspin provided the HCA [host channel adapter] that connects to Cisco’s switch. This turns the InfiniBand ‘fat pipe’ into multiple pipes—one for connecting servers, one for connecting to storage, and one for bridging over to Ethernet for external storage. Right now the switches are running at 10Gbps, and 30Gbps is becoming available. Companies can now use InfiniBand as a direct connection to storage. This is good for HPC applications that move massive amounts of data to storage as quickly as possible, such as data collection from satellites.”

As for InfiniBand storage arrays, Jonathan Eunice, president of the Illuminata research firm, considers Engenio and Texas Memory Systems to be early players. “Texas Memory’s solid-state disk [SSD] and Engenio’s [high-speed] disk subsystems are oriented toward some of the technical computing arenas where InfiniBand is gaining attention,” he says.

Texas Memory offers an InfiniBand interface that allows its SSD devices to connect to HPC networks. With the 4X InfiniBand interface, a single RamSan SSD provides up to 3GBps of sustained throughput over four ports. The interface allows the RamSan devices to connect natively to the high-bandwidth, low-latency servers used in grid computing environments.
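To put the 10Gbps, 30Gbps, and 3GBps figures in context, the short sketch below works through the link arithmetic. It assumes single-data-rate (SDR) signaling at 2.5Gbps per lane with 8b/10b encoding, and it assumes that the RamSan's four ports are each 4X links; neither detail is stated by the vendors quoted here, and the function names are purely illustrative.

```python
# Back-of-the-envelope InfiniBand link arithmetic.
# Assumptions (not from the article): SDR signaling, 8b/10b line coding.
LANE_SIGNAL_GBPS = 2.5          # signaling rate of one SDR lane, in Gbps
ENCODING_EFFICIENCY = 8 / 10    # 8b/10b: 8 data bits per 10 bits on the wire


def link_signal_gbps(lanes: int) -> float:
    """Raw signaling rate of one link (the figure vendors usually quote)."""
    return lanes * LANE_SIGNAL_GBPS


def link_data_gbytes(lanes: int) -> float:
    """Usable data rate of one link, in gigabytes per second."""
    data_gbps = link_signal_gbps(lanes) * ENCODING_EFFICIENCY
    return data_gbps / 8        # bits to bytes


def aggregate_gbytes(lanes: int, ports: int) -> float:
    """Theoretical ceiling across several identical ports."""
    return ports * link_data_gbytes(lanes)


if __name__ == "__main__":
    for lanes in (4, 12):
        print(f"{lanes}X link: {link_signal_gbps(lanes):.0f}Gbps signaling, "
              f"{link_data_gbytes(lanes):.1f}GBps of data")
    ceiling = aggregate_gbytes(lanes=4, ports=4)
    print(f"Four 4X ports: {ceiling:.1f}GBps ceiling; "
          f"3GBps sustained is {3 / ceiling:.0%} of that")
```

Under these assumptions a 4X link carries about 1GBps of data and a 12X link about 3GBps, so a sustained 3GBps across four 4X ports would sit at roughly three-quarters of the theoretical ceiling.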

LSI Logic’s Engenio division introduced storage systems with native InfiniBand at the Supercomputing conference in November 2005. Engenio’s 6498 controller and 6498 storage system are sold through OEMs and can be configured with either Fibre Channel or Serial ATA (SATA) disk drives.

“InfiniBand is becoming the interconnect of choice for inter-processor communications in Linux clusters,” says Steve Gardner, director of product marketing at Engenio. “Those customers started asking why they have to build a separate network for storage with Fibre Channel infrastructure, switches, and HBAs or buy converters that perform Fibre Channel-to-InfiniBand conversion. Both choices increase complexity and impact network performance.”

SGI and Verari are two of Engenio’s InfiniBand system OEMs. SGI resells Engenio’s InfiniBand disk arrays as the InfiniteStorage TP9700. Verari, a blade server developer, uses Engenio’s technology in its VS7000i InfiniBand-attached storage system.

Isilon is taking the concept of server clusters to storage environments, offering InfiniBand connections as options on its storage arrays. “Clustered storage is built from the ground up to store large amounts of unstructured data, digital content, and reference information,” says Brett Goodwin, vice president of marketing and business development at Isilon. “We teamed with Cisco to create a storage system that uses InfiniBand as a high-performance, low-latency cluster interconnect.”

Goodwin reports that since Isilon first introduced its IQ series of storage systems with InfiniBand connections in April 2005, about 90% of its customers have chosen InfiniBand over Gigabit Ethernet for node-to-node connectivity. Isilon offers the InfiniBand version of its IQ clustered storage systems at the same price as the Gigabit Ethernet version.

DataDirect Networks delivers more than 3GBps of read/write performance in its InfiniBand-based RAID storage networking appliance, the S2A9500. Designed for HPC and rich media applications, the S2A9500 can deliver 360 teraFLOPS and 1 petabyte of SATA storage.

Germany-based Xiranet Communications recently introduced its XAS 500-ib InfiniBand storage system, which is based on the SCSI RDMA Protocol (SRP) and offers capacity up to 7.5TB.

In October 2005, Voltaire and FalconStor teamed up to combine iSCSI management capabilities with the high performance of InfiniBand to enable accelerated backup and data replication.

In November 2005, Microsoft jumped into the market when it announced InfiniBand support for HPC environments with its Windows Compute Cluster Server 2003 software.

Hurdles Remain

One hurdle that InfiniBand faces is that it can’t be used over long distances, although, like Fibre Channel, it can be bridged. The distance limitation might not be an issue for server environments, but as the technology moves into storage, the ability to transport large amounts of data across long distances becomes more critical.

Obsidian Research Corp., a Canadian InfiniBand technology company, is developing long-range storage technologies with the US government. Obsidian has developed a 2-port, 1U box called Longbow XR that encapsulates InfiniBand traffic for transport over a variety of WAN links. In one demonstration, it linked remote clusters over OC-192c SONET networks at distances of up to 6,500km.
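A rough calculation suggests why distance is a hurdle in the first place. InfiniBand uses credit-based flow control at the link level, so keeping a long link busy requires buffering on the order of the link's bandwidth multiplied by its round-trip delay. The sketch below estimates that figure; it assumes light travels through fiber at about 200,000km per second and rounds the OC-192c rate to 10Gbps. It is an illustration of the general problem, not a description of how the Longbow XR works, and the function names are hypothetical.

```python
# Bandwidth-delay estimate for a long-haul link.
# Assumptions (not from the article): propagation speed in fiber and a
# 10Gbps line rate as a round number for OC-192c.
FIBER_KM_PER_S = 200_000.0   # roughly two-thirds the speed of light in vacuum


def one_way_delay_s(distance_km: float) -> float:
    """Propagation delay in one direction, ignoring equipment latency."""
    return distance_km / FIBER_KM_PER_S


def bytes_in_flight(rate_gbps: float, distance_km: float) -> float:
    """Data that must be outstanding to keep the link full for one round trip."""
    round_trip_s = 2 * one_way_delay_s(distance_km)
    bytes_per_s = rate_gbps * 1e9 / 8
    return bytes_per_s * round_trip_s


if __name__ == "__main__":
    for label, km in (("100m machine-room cable", 0.1),
                      ("6,500km WAN link", 6500.0)):
        delay_ms = one_way_delay_s(km) * 1e3
        in_flight = bytes_in_flight(10.0, km)
        print(f"{label}: {delay_ms:.4f}ms one way, "
              f"{in_flight:,.0f} bytes in flight at 10Gbps")
```

Under these assumptions the machine-room cable needs little more than a kilobyte of data in flight, while the 6,500km link needs roughly 81MB, which is the kind of gap a range-extension device has to absorb.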

The OpenIB Alliance also addressed the distance issue when it showcased the world’s largest cross-continental InfiniBand data center in conjunction with the Supercomputing 2005 conference. The project included InfiniBand clusters in California, Washington, and Virginia connected over a WAN with Obsidian’s Longbow XR devices.

Another major challenge that InfiniBand faces is the foothold that Fibre Channel and Ethernet already have in storage networking.

“Fibre Channel and Ethernet are entrenched,” says Illuminata’s Eunice, “but they can’t carry the traffic that InfiniBand can and do not have the low latency.”

VENDORS MENTIONED

Cisco, DataDirect Networks, Dell, Engenio, FalconStor, Hewlett-Packard, IBM, Isilon, LSI Logic, Mellanox, Microsoft, Network Appliance, Oracle, PathScale, Rackable Systems, Silicon Graphics, SilverStorm Technologies, Sun, Symantec, Texas Memory Systems, Verari, Voltaire, Xiranet Communications